Gleb Korablev: The Shocking Livestream Suicide & Aftermath

Do we truly understand the digital abyss that separates fleeting online fame from utter despair? The tragic case of Gleb Korablev serves as a stark, unsettling reminder of the internet's double-edged sword, where a livestream can become a window into the darkest corners of the human soul.

The internet, a boundless realm of information and connection, has also become a platform for expressing extreme anguish. On a seemingly ordinary morning in October 2019, a young man identified as Gleb Vyacheslavovich Korablev broadcast his final moments to the world. The incident, which unfolded on the Russian social media platform VK, left an indelible scar on the digital landscape. Its repercussions continue to reverberate, prompting critical conversations about online content moderation, the desensitization of audiences, and the mental health crisis of the digital age.

The event centers on a video, often referred to as "1444," which captured Korablev's suicide. The footage, raw and unfiltered, depicted Korablev pointing an assault rifle at his head and pulling the trigger. Its graphic nature drove its rapid spread across various online platforms, triggering a wave of shock, disbelief, and condemnation. Despite efforts to remove the content, mirrors and copies proliferated, turning the tragedy into a macabre spectacle. The delayed response from platforms like YouTube further fueled the controversy, raising questions about the efficacy of their content moderation policies.

| Category | Information |
|---|---|
| Full Name | Gleb Vyacheslavovich Korablev (Глеб Вячеславович Кораблев) |
| Date of Death | October 17, 2019 |
| Place of Death | Russia (likely) |
| Social Media Platform | VK (VKontakte) |
| Video Title/Identifier | Often referred to as "1444" |
| Cause of Death | Suicide by assault rifle |
| Occupation | Director and Writer (known for video 1444 (2019)) |
| Additional Notes | The incident was a livestreamed suicide. The video sparked controversy regarding content moderation on social media platforms. |
| Reference | Gleb Korablev - IMDb |

The dissemination of "1444" highlights the challenges of online content moderation in the age of social media. The sheer volume of user-generated content makes it virtually impossible for platforms to effectively monitor every post and stream. Algorithms designed to detect and remove harmful content often struggle with nuances and contextual understanding, leading to both false positives and, as in the case of Korablev's suicide, tragic oversights. The delay in removing the video from platforms like YouTube underscores the limitations of these systems and the need for more proactive and responsive moderation strategies.

Beyond the technical challenges, the proliferation of such content raises profound ethical questions. The ease with which graphic and disturbing material can be accessed and shared online contributes to a desensitization effect, particularly among younger audiences. Constant exposure to violence and trauma can erode empathy and normalize harmful behaviors. The spread of "1444" also raises concerns about the potential for copycat suicides, as individuals struggling with mental health issues may be influenced by the graphic depiction of self-harm. This phenomenon, often referred to as the "Werther effect," underscores the importance of responsible reporting and content moderation to mitigate the risk of contagion.

The aftermath of Korablev's suicide also saw the emergence of online communities dedicated to his memory, some of which became breeding grounds for morbid curiosity and insensitive commentary. While some users expressed genuine sympathy and sought to understand the circumstances leading to his tragic decision, others engaged in ghoulish speculation and shared leaked screenshots from the livestream. This duality reflects the complex and often contradictory nature of online interactions, where genuine grief and support can coexist with voyeurism and exploitation.

The incident prompted a renewed focus on mental health awareness and suicide prevention. Mental health advocates emphasize the importance of early intervention, destigmatization, and accessible resources for individuals struggling with suicidal ideation. The online community can play a crucial role in identifying and supporting individuals at risk, but it also requires a collective commitment to responsible online behavior and a rejection of harmful content. Platforms must prioritize the mental well-being of their users and invest in resources to promote positive online interactions and connect individuals with mental health support.

The case of Gleb Korablev is a stark reminder of the real-world consequences of online actions. It underscores the need for a more nuanced understanding of the digital landscape, one that acknowledges both its potential for connection and its capacity for harm. The tragedy also calls for a collective effort to promote responsible online behavior, prioritize mental health, and hold platforms accountable for the content they host. The internet is a powerful tool, but it must be wielded with care and a deep sense of responsibility. The memory of Gleb Korablev should serve as a catalyst for change, inspiring a more compassionate and ethical online environment.

The challenges in content moderation are multifaceted. While AI-powered algorithms can automate some of the detection processes, they often struggle with context, sarcasm, and evolving trends in harmful content. Human moderators are essential for nuanced decision-making, but they face burnout and emotional distress from constant exposure to disturbing material. Striking a balance between automation and human oversight, while ensuring the well-being of moderators, is a key challenge for social media platforms.

The responsibility for preventing the spread of harmful content extends beyond platforms to individual users. Promoting media literacy, critical thinking skills, and responsible online behavior is essential for empowering individuals to discern credible information from misinformation, and to report harmful content when they encounter it. Educational initiatives, public awareness campaigns, and collaborations between platforms, educators, and mental health organizations can contribute to a more informed and responsible online community.

The "Werther effect," named after Goethe's novel "The Sorrows of Young Werther," describes the phenomenon of copycat suicides following media reports of suicide. Research suggests that graphic and sensationalized depictions of suicide can increase the risk of vulnerable individuals taking their own lives. Responsible reporting guidelines emphasize avoiding details about the method of suicide, focusing on the underlying causes of distress, and providing information about available resources for mental health support. Social media platforms also have a role to play in mitigating the risk of contagion by removing content that glorifies or encourages suicide.

The online communities that emerge after tragedies like Korablev's suicide can be both supportive and harmful. Some groups provide a space for individuals to grieve, share their experiences, and connect with others who understand their pain. However, other groups may become breeding grounds for insensitive commentary, ghoulish speculation, and the sharing of graphic content. Moderating these communities effectively requires a sensitive approach that balances freedom of expression with the need to protect vulnerable individuals and prevent the spread of harmful material.

Mental health resources are often underfunded and inaccessible, particularly in underserved communities. Expanding access to mental health services, reducing stigma, and promoting early intervention are essential for preventing suicide. Telehealth services, online support groups, and crisis hotlines can provide immediate assistance to individuals in distress. Collaboration between healthcare providers, community organizations, and social media platforms is crucial for creating a comprehensive network of support for mental health.

The incident involving Gleb Korablev highlights the need for a multi-faceted approach to online safety and mental health. Social media platforms must invest in more effective content moderation strategies, promote responsible online behavior, and prioritize the well-being of their users. Individuals must develop critical thinking skills, report harmful content, and support those in distress. Governments must invest in mental health services and promote policies that protect vulnerable individuals. Only through a collective effort can we create a safer, more compassionate, and more ethical online environment.

The legal framework governing online content moderation varies significantly across countries, creating challenges for platforms operating globally. Some countries have strict laws against hate speech, incitement to violence, and the promotion of suicide, while others prioritize freedom of expression. Navigating these differing legal landscapes and ensuring compliance with local laws is a complex and ongoing challenge for social media platforms. International cooperation and the development of common standards for online content moderation could help to address this issue.

The use of artificial intelligence in content moderation raises ethical concerns about bias, transparency, and accountability. AI algorithms are trained on data sets that may reflect existing biases, leading to discriminatory outcomes. The lack of transparency in how these algorithms operate makes it difficult to assess their fairness and accuracy. Establishing clear ethical guidelines for the development and deployment of AI in content moderation is essential for ensuring that these systems are used responsibly and do not perpetuate harm.

The mental health of content moderators is often overlooked, despite the fact that they are constantly exposed to graphic and disturbing material. Moderators experience high rates of burnout, anxiety, and depression. Providing adequate support, training, and mental health resources for content moderators is essential for protecting their well-being and ensuring the effectiveness of content moderation efforts. Some platforms are experimenting with strategies such as limiting exposure to graphic content, providing access to therapy and counseling, and offering regular breaks to help moderators cope with the emotional toll of their work.

The debate over online anonymity is complex, with arguments on both sides. Anonymity can protect whistleblowers, activists, and individuals who fear retaliation for expressing their views. However, it can also enable harassment, abuse, and the spread of misinformation. Finding a balance between protecting anonymity and holding individuals accountable for their online actions is a key challenge for policymakers and platform administrators. Some platforms are experimenting with strategies such as requiring users to verify their identity or providing tools for reporting anonymous harassment.

The role of parents and educators in promoting online safety and responsible behavior is crucial. Parents need to have open conversations with their children about online risks, teach them how to identify and report harmful content, and monitor their online activity. Educators can integrate media literacy and digital citizenship into their curriculum, teaching students how to evaluate sources of information, think critically about online content, and behave responsibly online. Collaboration between parents, educators, and platforms is essential for creating a safe and supportive online environment for young people.

The use of deepfakes and other forms of manipulated media poses a growing threat to online safety and trust. Deepfakes can be used to spread misinformation, damage reputations, and even incite violence. Developing tools and techniques for detecting and debunking deepfakes is essential for protecting individuals and society from the harms of manipulated media. Social media platforms also have a responsibility to remove deepfakes that violate their policies or pose a threat to public safety.

The issue of online radicalization is a complex and challenging problem. Extremist groups use social media to recruit new members, spread propaganda, and incite violence. Countering online radicalization requires a multi-faceted approach that includes removing extremist content, disrupting online networks, and providing counter-narratives. Collaboration between law enforcement, intelligence agencies, and social media platforms is essential for identifying and addressing online radicalization threats.

The spread of misinformation and disinformation online is a major threat to democracy and public health. Misinformation can influence elections, undermine trust in institutions, and discourage people from taking necessary precautions during public health crises. Countering misinformation requires a combination of fact-checking, media literacy education, and platform policies that promote accurate information. Social media platforms also have a responsibility to label or remove false or misleading content that could cause harm.

The digital divide, the gap between those who have access to technology and those who do not, exacerbates inequalities and limits opportunities for marginalized communities. Bridging the digital divide requires investments in infrastructure, affordable internet access, and digital literacy training. Ensuring that everyone has access to the benefits of technology is essential for creating a more equitable and inclusive society.

The future of online safety and mental health depends on a collective commitment to responsible innovation, ethical behavior, and social responsibility. Social media platforms must prioritize the well-being of their users, invest in effective content moderation strategies, and promote positive online interactions. Individuals must develop critical thinking skills, report harmful content, and support those in distress. Governments must invest in mental health services, promote digital literacy, and protect vulnerable populations. Only through a concerted effort can we create a digital world that is both empowering and safe for everyone.

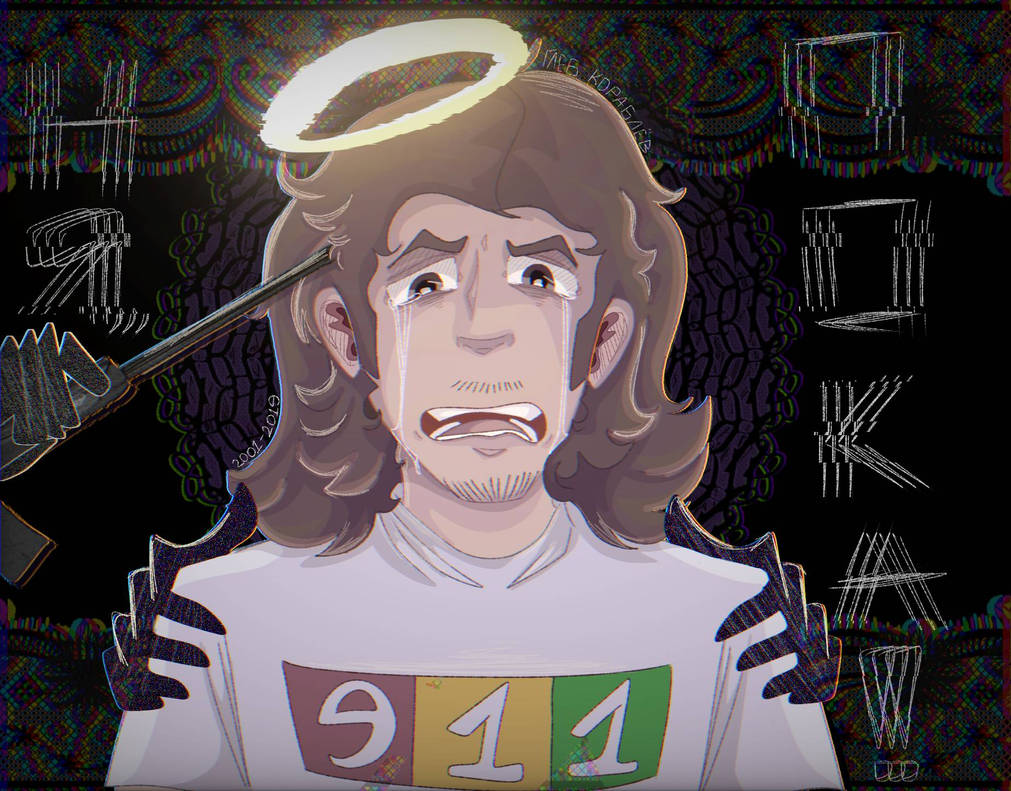

3 Gleb Korablev Pics (No PN18 Watermark) kgb2001

GLEB KORABLEV by MikeSmithIThink on DeviantArt

3 Gleb Korablev Pics (No PN18 Watermark) plohienovostipics